Research

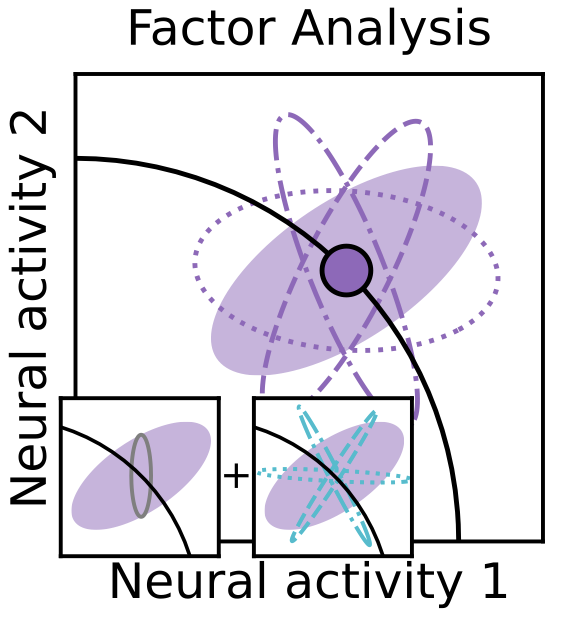

Identifying and Mitigating Statistical Biases in Neural Models of Tuning and Functional Coupling

In Preparation

P. S. Sachdeva, J. Bak, J. A. Livezey, M. E. Dougherty, S. Bhattacharyya, K. E. Bouchard

tl;dr: We investigated the interpretability of models for neural activity, discovered biases in underconstrained models that may harm interpretability, and developed methods to correct for those biases.

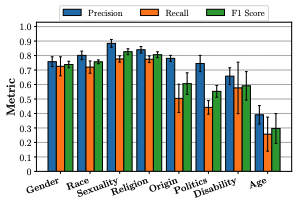

Targeted Identity Group Prediction in Hate Speech Corpora

6th Workshop on Online Abuse and Harms (NAACL 2022)

P. S. Sachdeva, R. Barreto, C. von Vacano, C. J. Kennedy

[paper] [code]

tl;dr: We developed neural network models to predict the identity group(s) targeted by potential hate speech, and assessed their performance in various scenarios.

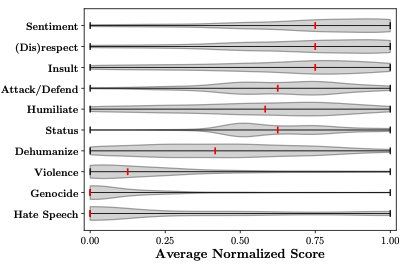

The Measuring Hate Speech Corpus: Leveraging Rasch Measurement Theory for Data Perspectivism

1st Workshop on Perspectivist Approaches to NLP (LREC 2022)

P. S. Sachdeva, R. Barreto, G. Bacon, A. Sahn, C. von Vacano, C. J. Kennedy

[paper] [dataset] [code]

tl;dr: We introduced the Measuring Hate Speech corpus, specifically designed to facilitate the measurement of hate speech using Rasch Measurement Theory.

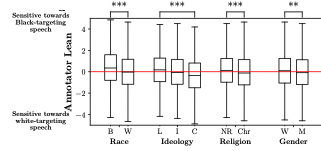

Assessing Annotator Identity Sensitivity via Item Response Theory: A Case Study in a Hate Speech Corpus

2022 ACM Conference on Fairness, Accountability, and Transparency

P. S. Sachdeva, R. Barreto, C. von Vacano, C. J. Kennedy

[paper] [code]

tl;dr: We used techniques from item response theory to characterize annotator identity sensitivity, or how an annotator's identity may impact their rating patterns on NLP tasks, and applied these techniques to annotations in a hate speech corpus.

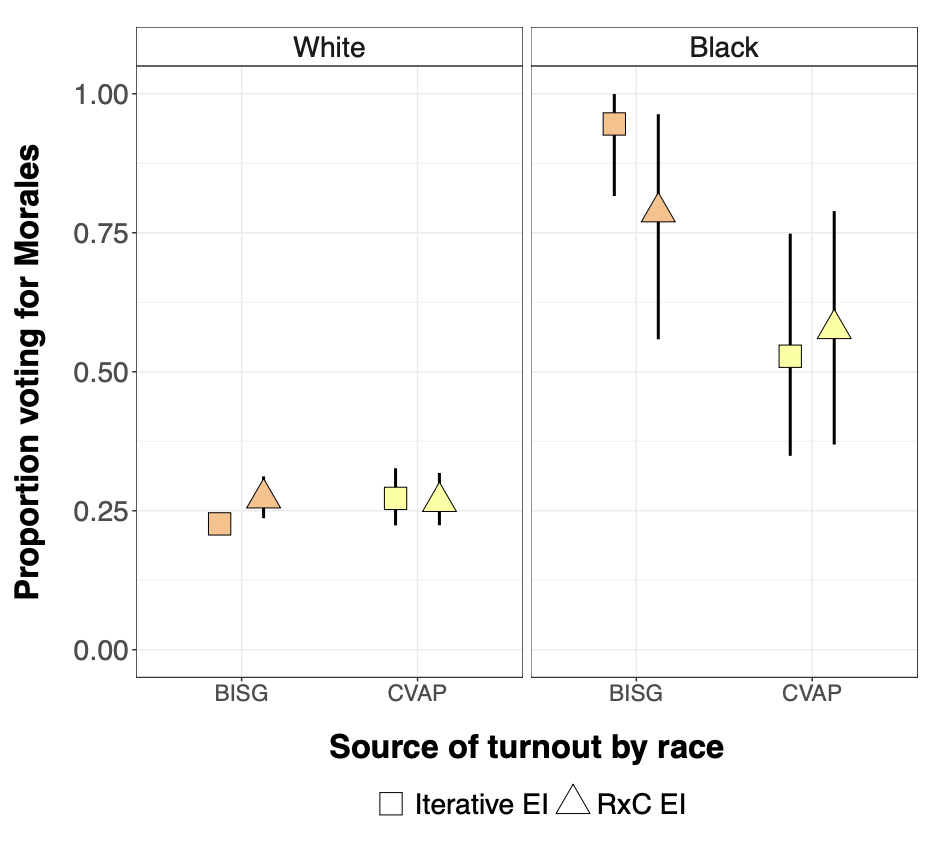

Comparing Methods for Estimating Demographics in Racially Polarized Voting Analyses

Under review at Sociological Methods and Research (SMR)

A. Decter-Frain, P. S. Sachdeva, L. Collingwood, J. Burke, H. Murayama, M. Barreto, S. Henderson, S. A. Wood, J. Zingher

[paper] [code]

tl;dr: We analyzed the conditions under which ecological inference techniques return similar results when provided racial imputation inputs versus traditional inputs.

Not optimal, just noisy: the geometry of correlated variability leads to highly suboptimal sensory coding

Under review at Nature Communications

J. A. Livezey, P. S. Sachdeva, M. E. Dougherty, M. T. Summers, K. E. Bouchard

[paper] [code]

tl;dr: We showed that null models traditionally used to assess the coding of neural populations are likely overstating their performance.

Improved inference in coupling, encoding, and decoding models and its consequence for neuroscientific interpretation

Journal of Neuroscience Methods (2021)

P. S. Sachdeva, J. A. Livezey, M. E. Dougherty, B. M. Gu, J. D. Berke, K. E. Bouchard

[paper] [code]

tl;dr: We showed that improved parametric inference in common neuroscience models drastically reduces the size of the models at no cost to predictive accuracy, thereby changing their neuroscientific interpretation.

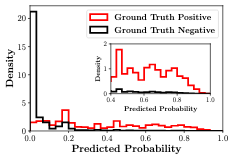

Accurate and Scalable Matching of Translators to Displaced Persons for Overcoming Language Barriers

ML4D Workshop at Conference on Neural Information Processing Systems (NeurIPS 2020)

D. Agarwal, Y. Baba, P. S. Sachdeva, T. Tandon, T. Vetterli, A. Alghunaim

[paper] [talk]

tl;dr: We showed that a lightweight and scalable logistic regression model could accurately match refugees and asylum seekers to volunteer translators via the Tarjimly mobile application.

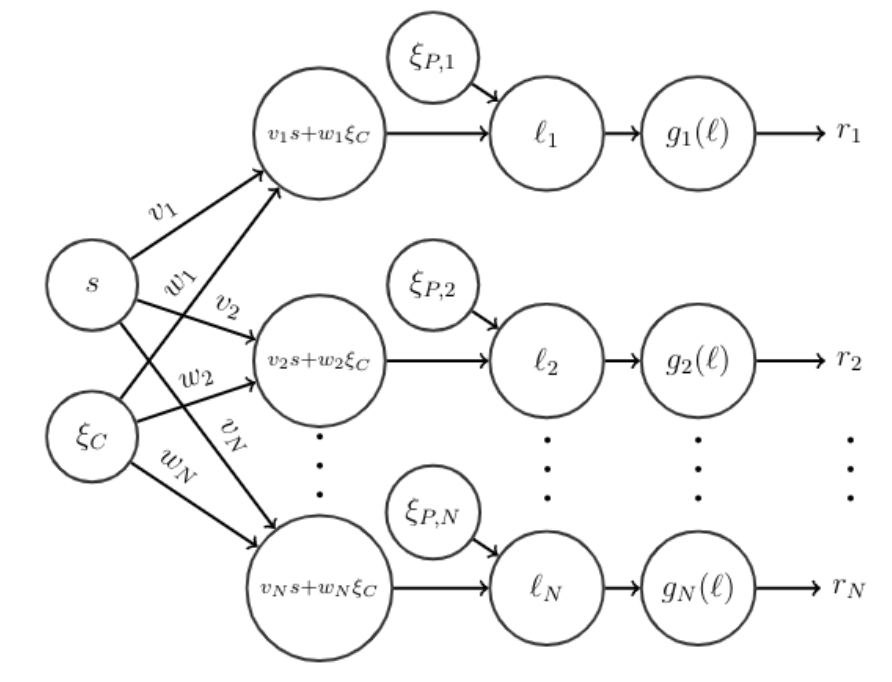

Heterogeneous synaptic weighting improves neural coding in the presence of common noise

Neural Computation (2020)

P. S. Sachdeva, J. A. Livezey, M. R. DeWeese

[paper] [preprint] [code]

tl;dr: We showed that a neural population coding for a stimulus can overcome a common noise source as long as the population possesses diverse synaptic weights, even if those weights amplify the noise.

PyUoI: The Union of Intersections Framework in Python

Journal of Open Source Software (2019)

P. S. Sachdeva, J. A. Livezey, A. J. Tritt, K. E. Bouchard

[paper] [code] [docs]

tl;dr: We developed a Python package that contains implementations for the Union of Intersections, a machine learning framework capable of building highly sparse and predictive models with minimal bias.

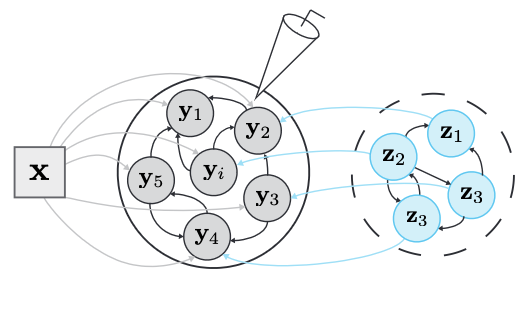

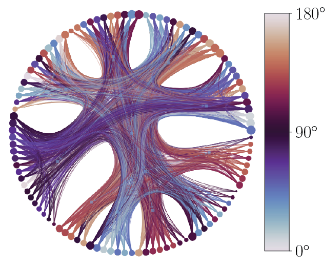

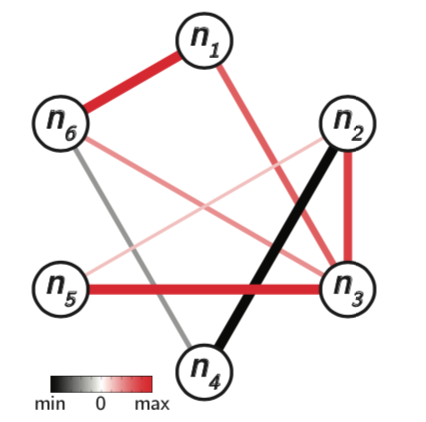

Sparse, Interpretable, and Predictive Functional Connectomics with UoILasso

IEEE Engineering in Medicine and Biology Society (EMBC 2019)

P. S. Sachdeva, S. Bhattacharyya, K. E. Bouchard

[paper] [slides]

tl;dr: We constructed functional connectivity networks from neural data with fewer edges but equally good predictive performance, compared to standard procedures.

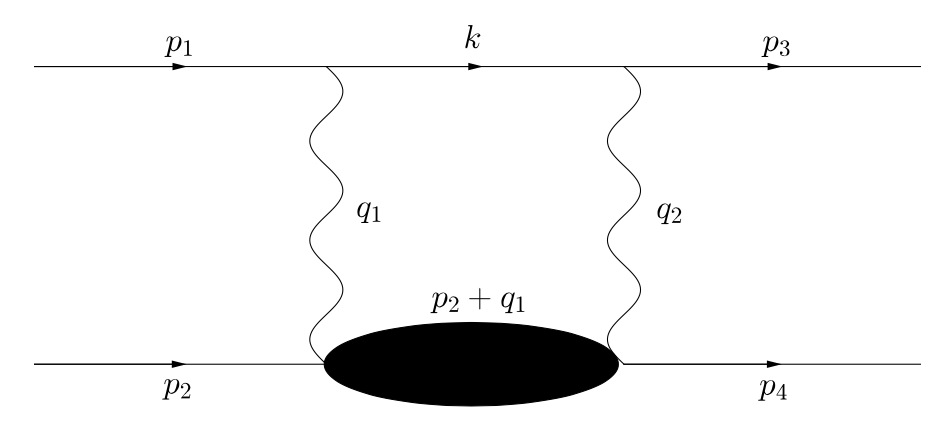

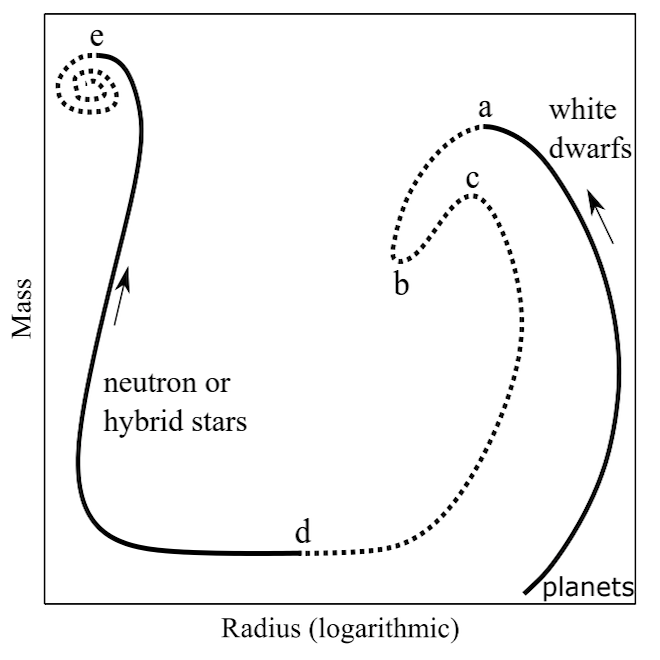

On the Stability of Strange Dwarf Hybrid Stars

The Astrophysical Journal (2017)

M. G. Alford, S. P. Harris, P. S. Sachdeva

[paper] [abstract]

tl;dr: We showed that certain stars, previously thought to be stable, are actually unstable.
